BIML co-founder Gary McGraw joins an esteemed panel of experts to discuss machine learning security in Dublin on Thursday, October 3rd. Participation requires registration. Please join us if you are in the area.

On the back of our LAWFARE piece on data feudalism, McGraw did a video podcast with Decipher and his old friend Dennis Fisher. Have a look.

Dan Geer came across this marketing thingy and sent it over. It serves to remind us that when it comes to ML, it’s all about the data.

Take a look at this LAWFARE article we wrote with Dan about data feudalism.

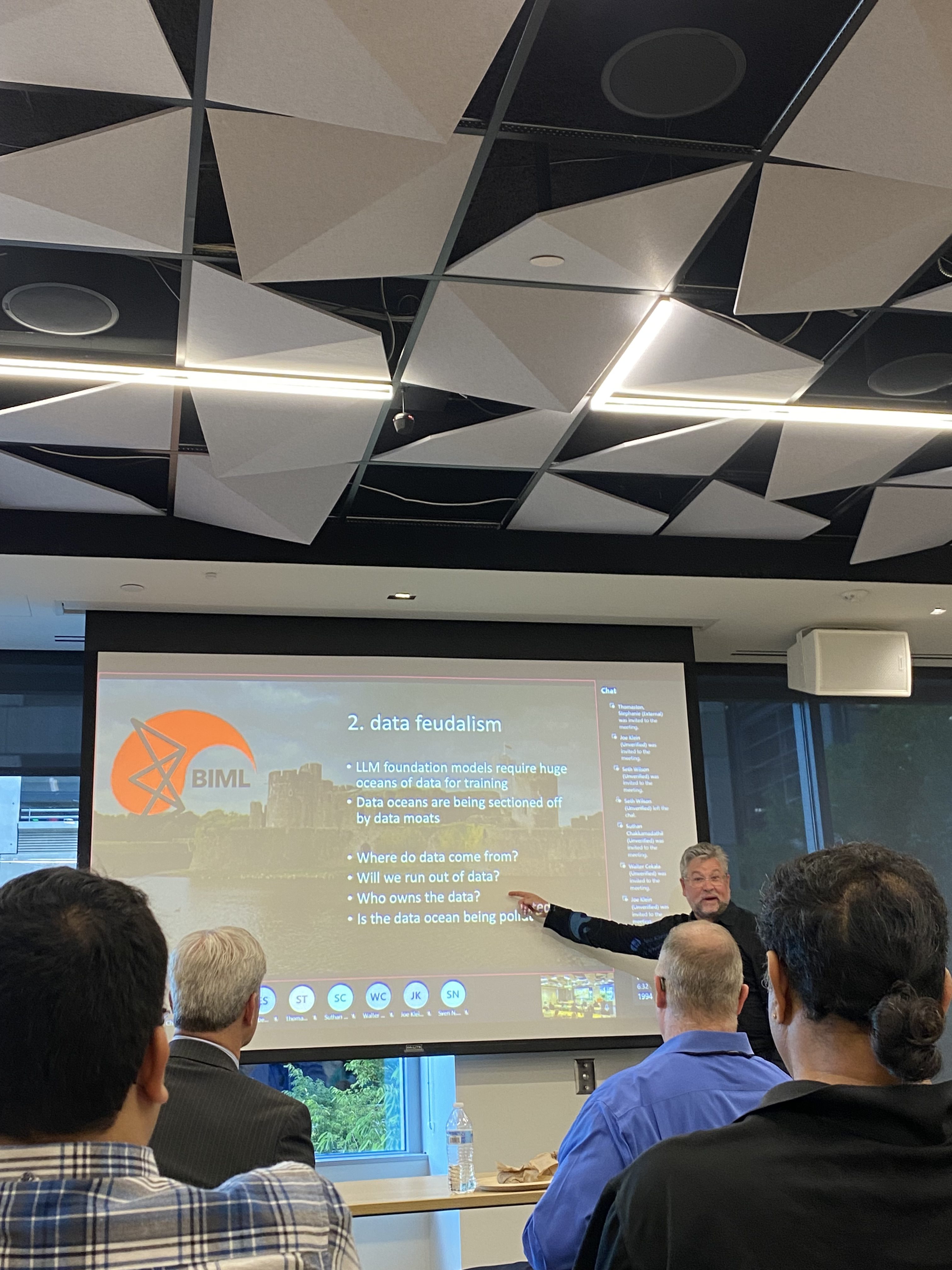

Welcome to the era of data feudalism. Large language model (LLM) foundation models require huge oceans of data for training—the more data trained upon, the better the result. But while the massive data collections began as a straightforward harvesting of public observables, those collections are now being sectioned off. To describe this situation, consider a land analogy: The first settlers coming into what was a common wilderness are stringing that wilderness with barbed wire. If and when entire enormous parts of the observable internet (say, Google search data, Twitter/X postings, or GitHub code piles) are cordoned off, it is not clear what hegemony will accrue to those first movers; they are little different from squatters trusting their “open and notorious occupation” will lead to adverse possession. Meanwhile, originators of large data sets (for example, the New York Times) have come to realize that their data are valuable in a new way and are demanding compensation even after those data have become part of somebody else’s LLM foundation model. Who can gain access control for the internet’s publicly reachable data pool, and why? Lock-in for early LLM foundation model movers is a very real risk.

BIML coined the term data feudalism in our LLM Risks document (which you should read). Today, after a lengthy editing cycle, LAWFARE published an article co-authored by McGraw, Dan Geer, and Harold Figueroa. Have a read, and pass it on.

https://www.lawfaremedia.org/article/why-the-data-ocean-is-being-sectioned-off

BIML enthusiasts may be interested in this event, in which co-founder Gary McGraw participated.

Deciphering AI: Unpacking the Impact on Cybersecurity, by Lindsey O’Donnell-Welch

The event also features Phil Venables, CISO of Google Cloud, and Nathan Hamiel from Black Hat.

Here’s the Decipher landing page where the event tomorrow will be livestreamed: https://duo.com/decipher/deciphering-ai-unpacking-the-impact-on-cybersecurity

It will also be livestreamed on the Decipher LinkedIn page: https://www.linkedin.com/events/decipheringai-unpackingtheimpac7207405768856219648/theater/

Streaming will begin July 11 at 2pm ET.

In May we were invited to present our work to a global audience of Google engineers and scientists working on ML. Security people also participated. The talk was delivered via video and hosted by Google Zurich.

A few hundred people participated live. Unfortunately, though the session was recorded on video, Google has requested that we not post the video. OK Google. You do know that what we said about you is what we say to everybody about you. Whatever. LOL.

Here are the talk abstract and a bio for McGraw, who delivered the presentation. If you would like to host a BIML presentation for your organization, get in touch.

10, 23, 81 — Stacking up the LLM Risks: Applied Machine Learning Security

I present the results of an architectural risk analysis (ARA) of large language models (LLMs), guided by an understanding of standard machine learning (ML) risks previously identified by BIML in 2020. After a brief level-set, I cover the top 10 LLM risks, then detail 23 black box LLM foundation model risks screaming out for regulation, and finally provide a bird’s-eye view of all 81 LLM risks BIML identified. BIML’s first work, published in January 2020, presented an in-depth ARA of a generic machine learning process model, identifying 78 risks. In this talk, I consider a more specific type of machine learning use case (large language models) and report the results of a detailed ARA of LLMs. This ARA serves two purposes: 1) it shows how our original BIML-78 can be adapted to a more particular ML use case, and 2) it provides a detailed accounting of LLM risks. At BIML, we are interested in “building security in” to ML systems from a security engineering perspective. Securing a modern LLM system (even if what’s under scrutiny is only an application involving LLM technology) must involve diving into the engineering and design of the specific LLM system itself. This ARA is intended to make that kind of detailed work easier and more consistent by providing a baseline and a set of risks to consider.

Gary McGraw, Ph.D.

Mastodon: @cigitalgem@sigmoid.social

Gary McGraw is co-founder of the Berryville Institute of Machine Learning, where his work focuses on machine learning security. He is a globally recognized authority on software security and the author of eight best-selling books on this topic. His titles include Software Security, Exploiting Software, Building Secure Software, Java Security, Exploiting Online Games, and six other books. He is also editor of the Addison-Wesley Software Security series. Dr. McGraw has written over 100 peer-reviewed scientific publications. Gary serves on the advisory boards of Calypso AI, Legit, Irius Risk, Maxmyinterest, and Red Sift. He has also served as a board member of Cigital and Codiscope (acquired by Synopsys) and as an advisor to CodeDX (acquired by Synopsys), Black Duck (acquired by Synopsys), Dasient (acquired by Twitter), Fortify Software (acquired by HP), and Invotas (acquired by FireEye). Gary produced the monthly Silver Bullet Security Podcast for IEEE Security & Privacy magazine for thirteen years. His dual Ph.D. in Cognitive Science and Computer Science is from Indiana University, where he serves on the Dean’s Advisory Council for the Luddy School of Informatics, Computing, and Engineering.

BIML turned out in force for a version of the LLM Risks presentation at ISSA NoVa.

BIML showed up in force (that is, all of us). We even dragged along a guy from Meta.

The ISSA President presents McGraw with an ISSA coin.

Though we appreciate Microsoft’s sponsorship of the ISSA meeting and the space it lent us in Reston, here is what BIML really thinks about Microsoft’s approach to what they call “Adversarial AI.”

No, really. You can’t even begin to pretend that “red teaming” is going to improve machine learning security.

Team Dinner.

The abstract for the LLM Risks talk appears above. We love presenting this work. Get in touch.

BIML wrote an article for IEEE Computer describing 23 black box risks found in LLM foundation models. In our view, these risks make perfect targets for government regulation of LLMs. Have a read. You can also fetch the article from the IEEE.

CalypsoAI produced a video interview in which I hosted Jim Routh and Neil Serebryany. We talked all about AI/ML security at the enterprise level. The conversation is great. Have a listen.

I am proud to be an advisor to CalypsoAI. Have a look at their website.