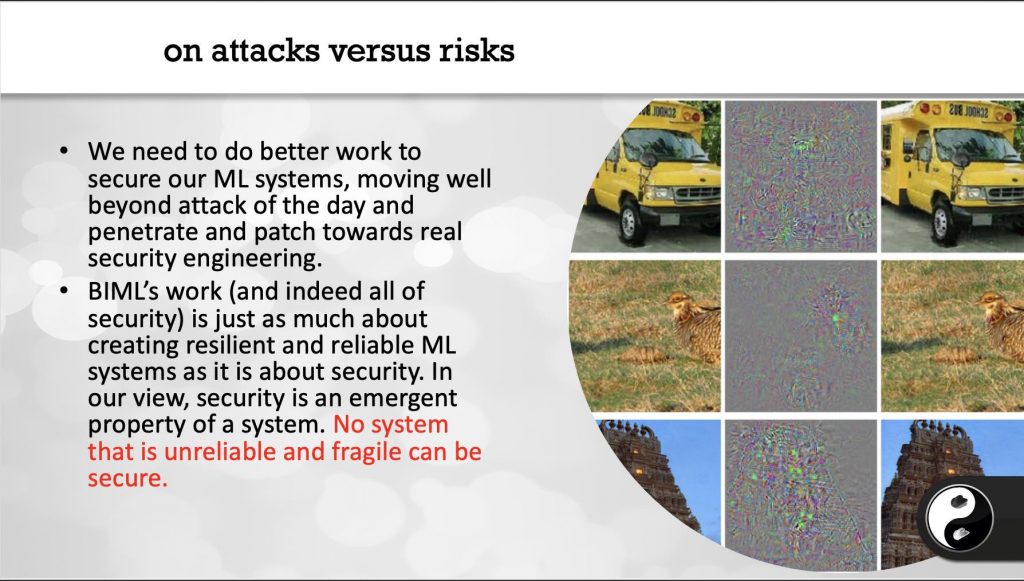

An important part of our mission at BIML is to spread the word about machine learning security. We’re interested in compelling and informative discussions of the risks of AI that get past the scary sound bite or the sexy attack story. We’re proud to continue the bi-monthly video series we’re calling BIML in the Barn.

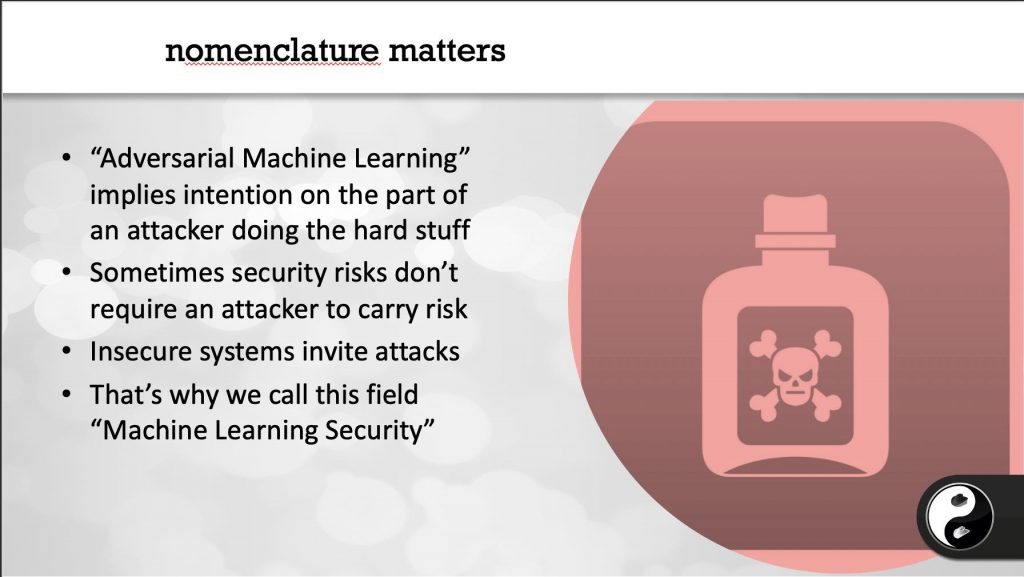

Our third video talk features Ram Shankar Siva Kumar, a researcher at Microsoft Azure working on Adversarial Machine Learning. Of course, we prefer to call this Security Engineering for Machine Learning. Lots of good stuff in this talk about regulation, compliance, security, and privacy.

Ram ponders, “Why is your toaster more trustworthy than your self-driving car?”

Here’s Ram!